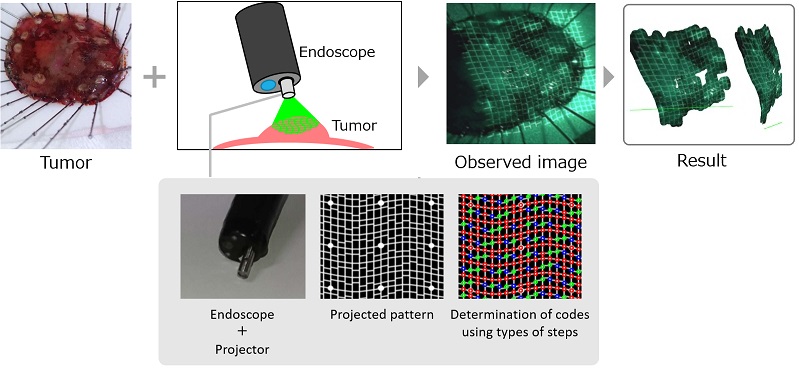

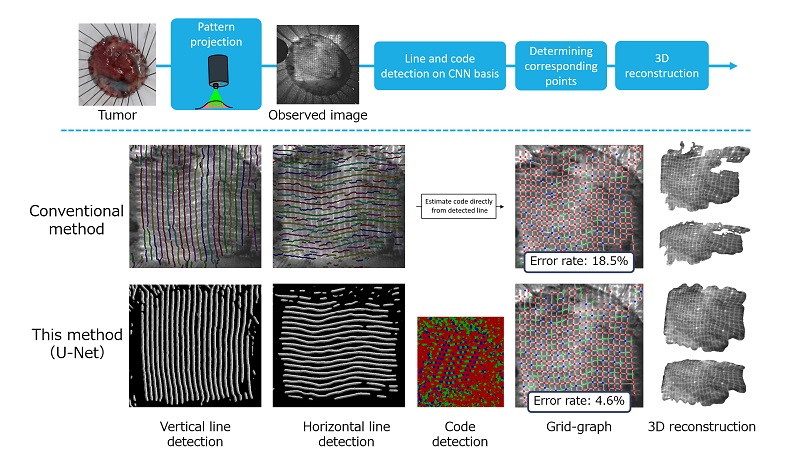

Endoscopic examination often requires measuring the size of tumors and similar lesions.

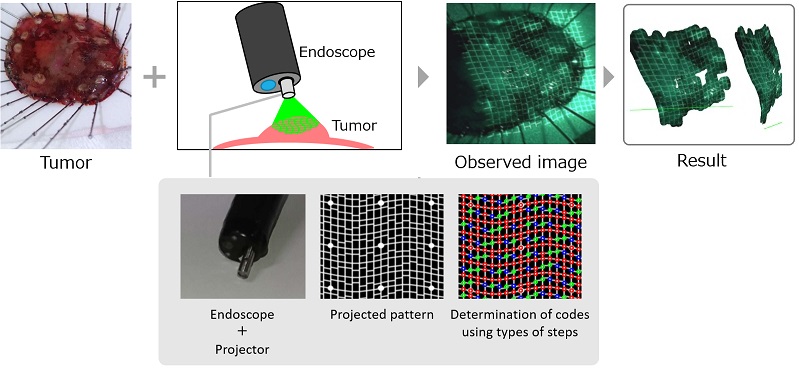

To make such measurements more accurate, we are developing a 3D endoscope system based on the active stereo method, in which a light projector is attached to the endoscope camera.

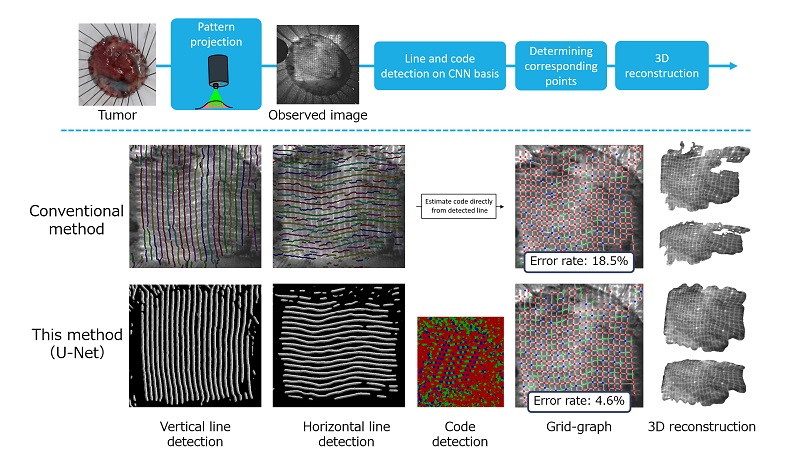

A problem with the conventional method is that the success of 3D reconstruction depends on how stably the features of the projected pattern can be extracted from the image captured by the endoscope camera.

In this research, more stable feature extraction is achieved by replacing the conventional image-processing-based feature extraction with a learning-based method that uses a fully convolutional network (FCN), specifically U-Net.

In learning-based feature extraction, U-Net is trained to extract the vertical and horizontal grid lines and to extract the codes at the grid intersections.

We have successfully applied these to the stable 3D reconstruction of living tissue such as tumors.


3D endoscope system.


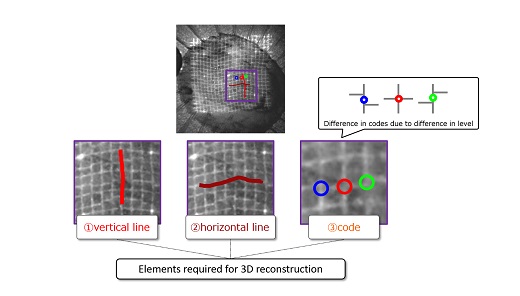

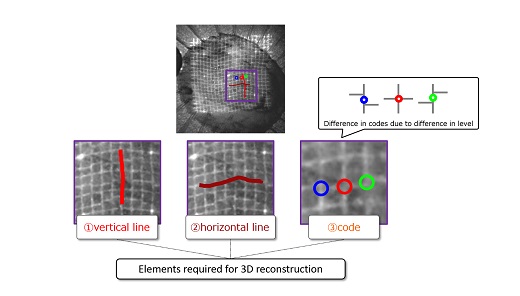

Pattern features necessary for 3D reconstruction.

In the active stereo method, the 3D position of the object is calculated from the correspondence between the grid pattern projected onto the measurement target and the captured observation image. The features of the projected pattern must therefore be extracted stably from the observation image.
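Once a correspondence is known, the 3D position follows from triangulation. The following is a minimal sketch, assuming a pinhole camera at the origin and assuming that each projected grid line sweeps out a plane whose equation in camera coordinates is known from calibration. The function name, the plane parameters, and the numbers are hypothetical and are not taken from the actual system.

```python
def triangulate_ray_plane(pixel, focal_length, principal_point,
                          plane_normal, plane_offset):
    """Intersect the camera ray through `pixel` with a projector light plane.

    The plane is n . X = d in camera coordinates (assumed known).
    Returns (X, Y, Z), or None if the ray is parallel to the plane.
    """
    u, v = pixel
    cx, cy = principal_point
    # Viewing-ray direction for a pinhole camera located at the origin.
    ray = ((u - cx) / focal_length, (v - cy) / focal_length, 1.0)
    denom = sum(n * r for n, r in zip(plane_normal, ray))
    if abs(denom) < 1e-12:
        return None  # ray never meets the plane
    t = plane_offset / denom  # scale along the ray at the intersection
    return tuple(t * r for r in ray)


# Hypothetical values: a pixel right of the image centre and a slanted plane.
point = triangulate_ray_plane(
    pixel=(420, 240),
    focal_length=500.0,
    principal_point=(320, 240),
    plane_normal=(1.0, 0.0, 0.3),
    plane_offset=25.0,
)
print(point)
```

The sketch shows why feature extraction matters: a wrong line or a wrongly identified plane gives a wrong depth for every pixel on that line.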

In this system, the projection pattern for the active stereo method is a grid pattern with stepped offsets, which is robust to blur and similar degradation. Three types of steps are arranged regularly over the grid, and accurately identifying which type appears at each intersection provides the correspondences needed for 3D reconstruction.
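One way the step codes could be used to find correspondences is sketched below. It assumes the codes of the projected grid are stored as a 2D array of values 0, 1, 2 and that a small window of codes has been read from the observation image; it then searches for where that window fits in the pattern. This is an illustrative brute-force search with hypothetical names and data, not the matching procedure of the actual system.

```python
def find_code_window(pattern_codes, observed):
    """Return all (row, col) offsets where `observed` fits `pattern_codes`.

    Entries of `observed` that are None (code not detected) match anything.
    """
    rows, cols = len(pattern_codes), len(pattern_codes[0])
    h, w = len(observed), len(observed[0])
    matches = []
    for r in range(rows - h + 1):
        for c in range(cols - w + 1):
            if all(
                observed[i][j] is None
                or observed[i][j] == pattern_codes[r + i][c + j]
                for i in range(h)
                for j in range(w)
            ):
                matches.append((r, c))
    return matches


# Hypothetical 3 x 4 grid of step codes and a 2 x 2 observed window.
pattern = [
    [0, 1, 2, 0],
    [2, 2, 1, 0],
    [1, 0, 0, 2],
]
observed = [[1, 2], [2, 1]]
print(find_code_window(pattern, observed))
```

A unique match fixes which projected grid lines the observed lines correspond to. Several matches mean the window is too small or too many codes were misread, which is why accurate code extraction matters.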

Projected pattern features to be extracted.


Training and feature extraction with the learning-based method

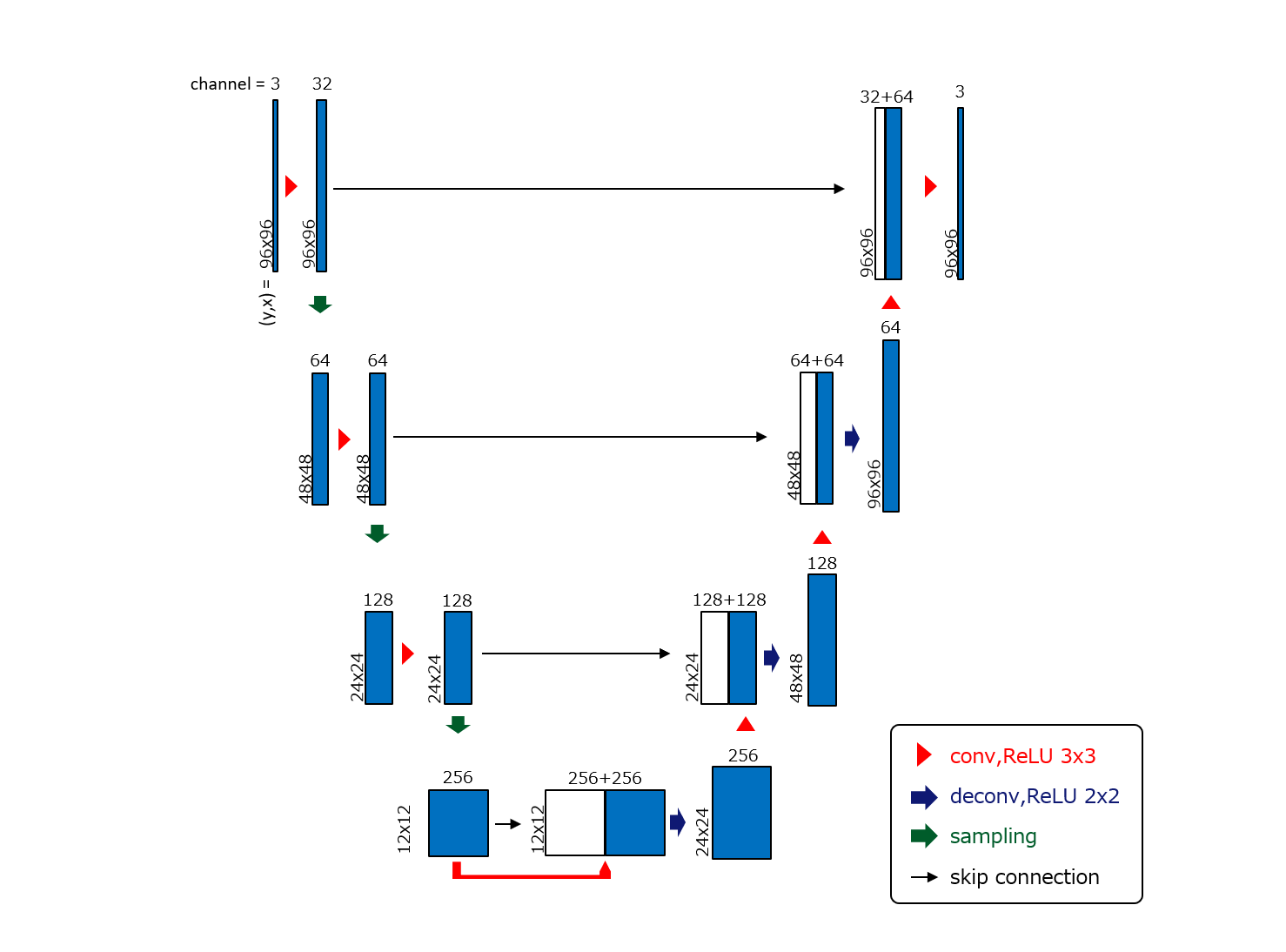

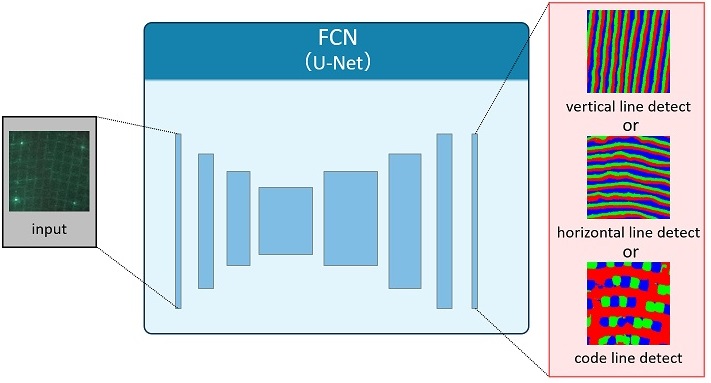

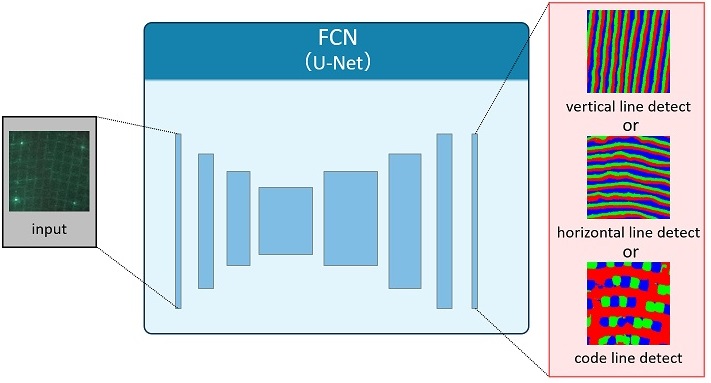

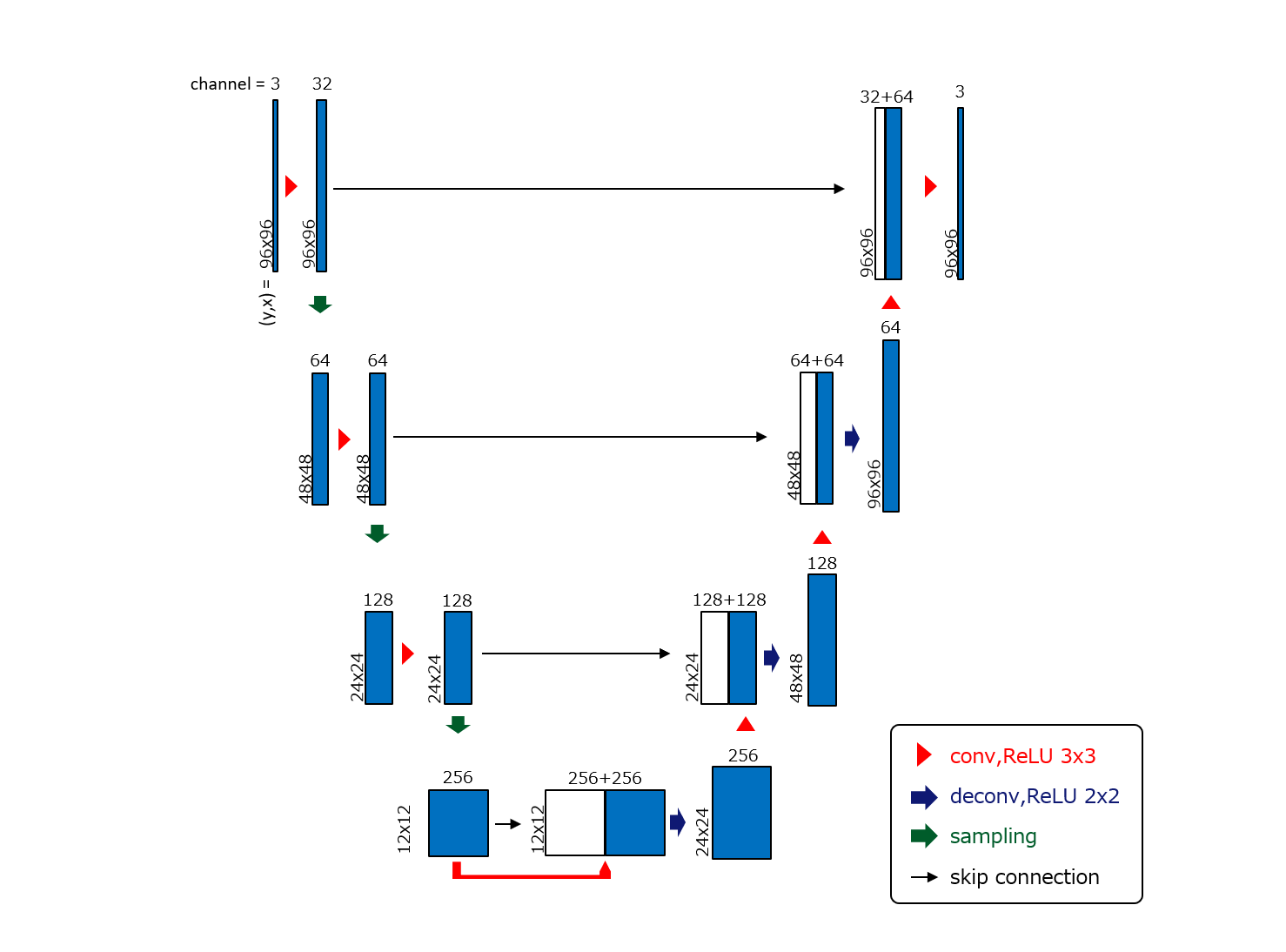

A fully convolutional network (FCN) is a neural network in which all layers are convolutional. U-Net is one type of FCN and is widely used in medical image segmentation.

When U-Net is applied to feature extraction in the 3D endoscope system, each task is trained with three labels. For line extraction, the labels are "line area (right or upper side)", "line area (left or lower side)", and "outside line area". For code extraction, each of the three step types is assigned its own label.

When creating a ground-truth image for a line, the line area is first given a width and then divided into two labels.

This processing makes the change in the feature values more gradual, which improves learning efficiency. Taking the boundary between the two labels as the line position also suppresses breaks in the extracted lines.
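The two-label scheme for a single vertical line can be sketched as follows. This is a minimal illustration, assuming integer label ids and a perfectly straight line; the names `make_line_labels`, `line_positions`, and the label values are hypothetical and do not come from the original implementation.

```python
OUTSIDE, LEFT, RIGHT = 0, 1, 2  # hypothetical label ids


def make_line_labels(width, height, line_x, half_width):
    """Build a label image for one vertical line at column `line_x`.

    Pixels up to `half_width` columns left of the line get LEFT, pixels up
    to `half_width` columns from the line rightwards get RIGHT, and all
    remaining pixels get OUTSIDE.
    """
    labels = [[OUTSIDE] * width for _ in range(height)]
    x_start = max(0, line_x - half_width)
    x_end = min(width, line_x + half_width)
    for y in range(height):
        for x in range(x_start, x_end):
            labels[y][x] = LEFT if x < line_x else RIGHT
    return labels


def line_positions(label_row):
    """Columns where a LEFT pixel is immediately followed by a RIGHT pixel."""
    return [
        x + 1
        for x in range(len(label_row) - 1)
        if label_row[x] == LEFT and label_row[x + 1] == RIGHT
    ]


labels = make_line_labels(width=10, height=2, line_x=5, half_width=2)
print(labels[0])
print(line_positions(labels[0]))
```

Because the line is recovered from the boundary between the two labels rather than from a one-pixel-wide class, a few mislabeled pixels inside the band do not necessarily break the line.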

Example of training data.



Projected pattern feature extraction by U-Net.



U-Net architecture used.


Measurement of a resected human carcinoma specimen.

To demonstrate the effectiveness of the proposed method, the pattern was projected onto a resected specimen of a human cancer tumor, images were captured with the endoscope, and features were extracted using the trained U-Net.

Decoding and 3D reconstruction result.


Publications

- Ryo Furukawa, Masahito Naito, Daisuke Miyazaki, Masashi Baba, Shinsaku Hiura, Hiroshi Kawasaki, "HDR image synthesis technique for active stereo 3D endoscope system", International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC2017), pp. 1-4, 2017

- Ryo Furukawa, Masahito Naito, Daisuke Miyazaki, Masashi Baba, Shinsaku Hiura, Yoji Sanomura, Shinji Tanaka, Hiroshi Kawasaki, "3D Endoscope System Using Asynchronously Blinking Grid Pattern Projection for HDR Image Synthesis", Computer Assisted and Robotic Endoscopy and Clinical Image-Based Procedures (CARE2017), pp. 16-28, 2017

- Ryo Furukawa, Masaki Mizomori, Shinsaku Hiura, Shiro Oka, Shinji Tanaka, Hiroshi Kawasaki, "Wide-area shape reconstruction by 3D endoscopic system based on CNN decoding, shape registration and fusion", OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis (CARE2018), pp. 139-150, 2018
